A partnership between Qualcomm and an AI startup promises lightning-quick AI where it’s really needed: searching through your photos and videos, stored locally on your device, and using them as a source of information.

Right now, the partnership is a foundational one, aimed at the future. Memories.ai is launching what it calls its Large Visual Memory Model (LVMM) 2.0 in partnership with Qualcomm, with an eye toward releasing it in 2026. At that point, the two companies will begin pitching the LVMM to customers who develop their own applications for smartphones, headsets, and PCs.

Could we see a Samsung Gallery on an Android phone, powered by Memories? Conceptually, that’s the sort of relationship that Memories.ai envisions.

People aren’t great at remembering the details of an experience, but a visual can serve as a trigger that unlocks the surrounding detail. That’s the metaphor Memories.ai is using, explained Shawn Shen, the company’s co-founder and chief executive: the image of a hamburger you ate two weeks ago helps bring back the details of the meal, where it was, and who you ate it with. The problem Memories.ai is trying to solve is that machines have become great at recognizing the relationships between words and data, but are much less capable when it comes to imagery.

“Ultimately, memories will win,” Shen said.

Memories.ai develops two pieces of technology: an encoder and the search infrastructure. Memories.ai isn’t the technology behind the actual image or video that you’d pull up to show your friends or family. Instead, it captures a version of the image or video that’s optimized for the information it contains. That data is then passed to the search infrastructure, so that a query like “my group of friends eating dinner in Korea” returns the right result.
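To make the split between an encoder and a search layer concrete, here is a minimal Python sketch of the general pattern: each item is turned into a compact vector once, and queries are answered by ranking those stored vectors. This is not Memories.ai’s code. The `encode` function, the `MemoryIndex` class, and the file names are hypothetical, and a simple bag-of-words vector built from a text description stands in for the learned visual embedding a real model would compute from pixels or video frames.

```python
import math
import re
from collections import Counter


def encode(description: str) -> dict[str, float]:
    """Hypothetical stand-in for a visual encoder.

    Turns a text description into an L2-normalized bag-of-words vector.
    A real system would run a learned model over the image or video
    frames and emit a dense embedding instead.
    """
    counts = Counter(re.findall(r"[a-z]+", description.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {tok: c / norm for tok, c in counts.items()} if norm else {}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(weight * b.get(tok, 0.0) for tok, weight in a.items())


class MemoryIndex:
    """Hypothetical on-device index: stores encodings, not the media itself."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, dict[str, float]]] = []

    def add(self, media_id: str, description: str) -> None:
        # Encoding step: keep only the compact representation plus an ID
        # that points back at the original photo or video file.
        self._entries.append((media_id, encode(description)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        # Retrieval step: encode the query the same way, then rank every
        # stored vector by similarity and return the top k matches.
        q = encode(query)
        ranked = sorted(
            ((media_id, cosine(q, vec)) for media_id, vec in self._entries),
            key=lambda pair: pair[1],
            reverse=True,
        )
        return ranked[:k]


if __name__ == "__main__":
    index = MemoryIndex()
    index.add("IMG_0412.jpg", "friends eating dinner at a restaurant in Korea")
    index.add("VID_0099.mp4", "dog knocks over a vase in the living room")
    index.add("IMG_0520.jpg", "pizza delivered to the front door")
    for media_id, score in index.search("my group of friends eating dinner in Korea", k=2):
        print(f"{media_id}: {score:.2f}")
```

Running the query from the article against this toy index ranks the Korea dinner photo first. The design choice worth noting is that the index never touches the original files after encoding, which mirrors the article’s point that Memories.ai works on an information-optimized version of the media rather than the photo or video you’d show someone.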

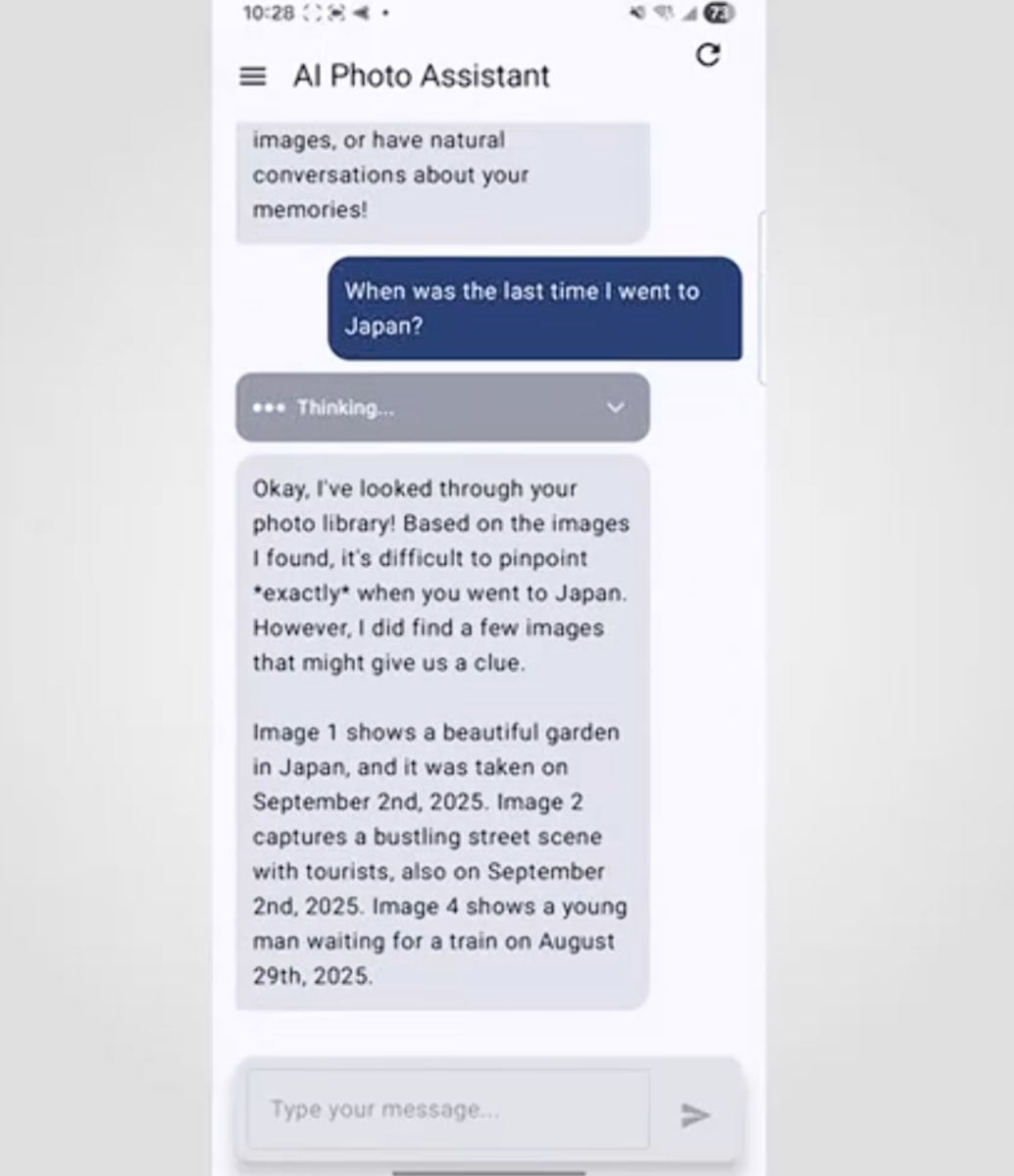

Memories.ai provided a demonstration of its technology, showing how videos can be searched and queried using natural language.

Photo search and photo query

The Memories technology is heading in a couple of different directions. For now, the partnership seems aimed simply at providing a better version of on-device photo and video search: essentially taking something like Google Photos and developing a superior, private version of it. Some built-in photo-gallery apps tag photos with locations or the people in them; Memories is essentially creating those tags on the fly.

Shen said that the encoding technology could run constantly, culling information gleaned from the real world. That kind of constant recording doesn’t sound like the plan for smart glasses built on Qualcomm’s XR platform or other wearables, however; instead, it could be a function for a security camera.

The second major function of the Memories.ai technology is the ability to “talk to it,” much as Otter.ai’s AI transcription service lets you ask questions about a particular transcript.

“When was the last time the pizza got delivered? What suspicious events happened around my home? When did my dog knock the vase over? You can interact with all your personal media files recorded from cameras by just having this natural language chatting,” Shen said.

Some of this information could be culled from other sources, of course; you could always find out the last time you went to Japan by looking at your calendar or searching your email for a trip reservation. Memories.ai believes that a photo or video holds more context than that.

The Qualcomm partnership is the first time that the Memories.ai team has publicly partnered with a chip company for on-device searching.

“This partnership will enable AI platforms that are not only responsive but also context-aware, able to retain visual information, recognize patterns over long periods, and perform reliably even at the edge of networks,” Vinesh Sukumar, vice president of product management and head of generative AI at Qualcomm, said in a statement. “Together, we are speeding up our shared goal to deliver smarter, more intuitive intelligence to real-world applications.”

Internally, Qualcomm is “super excited” about the partnership, believing that the Memories.ai technology could be used to search within videos and eventually even edit them, employees said. In addition, the Memories model is small enough to run locally on the device, removing the need for a cloud connection as well as the lag of going back and forth with the cloud during a search.

The companies haven’t specified which Qualcomm processors are being targeted, but Shen said that the encoding process runs on the local NPU, while retrieval is essentially like using the CPU to fetch results from a database. Qualcomm, of course, launched the Snapdragon X2 Elite PC processor this fall, alongside the Snapdragon 8 Elite Gen 5 for smartphones and other mobile devices.

Eventually, Shen said, Memories plans to design its own application. But for now, Memories and Qualcomm intend to start pitching device makers on building the Memories.ai technology into wearables, phones, and cameras beginning in 2026.